Gateway architecture

Learn about the architecture and design principles of NGINX Gateway Fabric.

This information is intended primarily for two groups:

- Cluster Operators who would like to know how the software works and understand how it can fail.

- Developers who would like to contribute to the project.

The reader needs to be familiar with core Kubernetes concepts, such as pods, deployments, services, and endpoints. For an understanding of how NGINX itself works, you can read the “Inside NGINX: How We Designed for Performance & Scale” blog post.

Overview

NGINX Gateway Fabric is an open source project that provides an implementation of the Gateway API using NGINX as the data plane. The goal of this project is to implement the core Gateway APIs – Gateway, GatewayClass, HTTPRoute, GRPCRoute, TCPRoute, TLSRoute, and UDPRoute – to configure an HTTP or TCP/UDP load balancer, reverse proxy, or API gateway for applications running on Kubernetes. NGINX Gateway Fabric supports a subset of the Gateway API.

For a list of supported Gateway API resources and features, see the Gateway API Compatibility documentation.

We have more information regarding our design principles in the project’s GitHub repository.

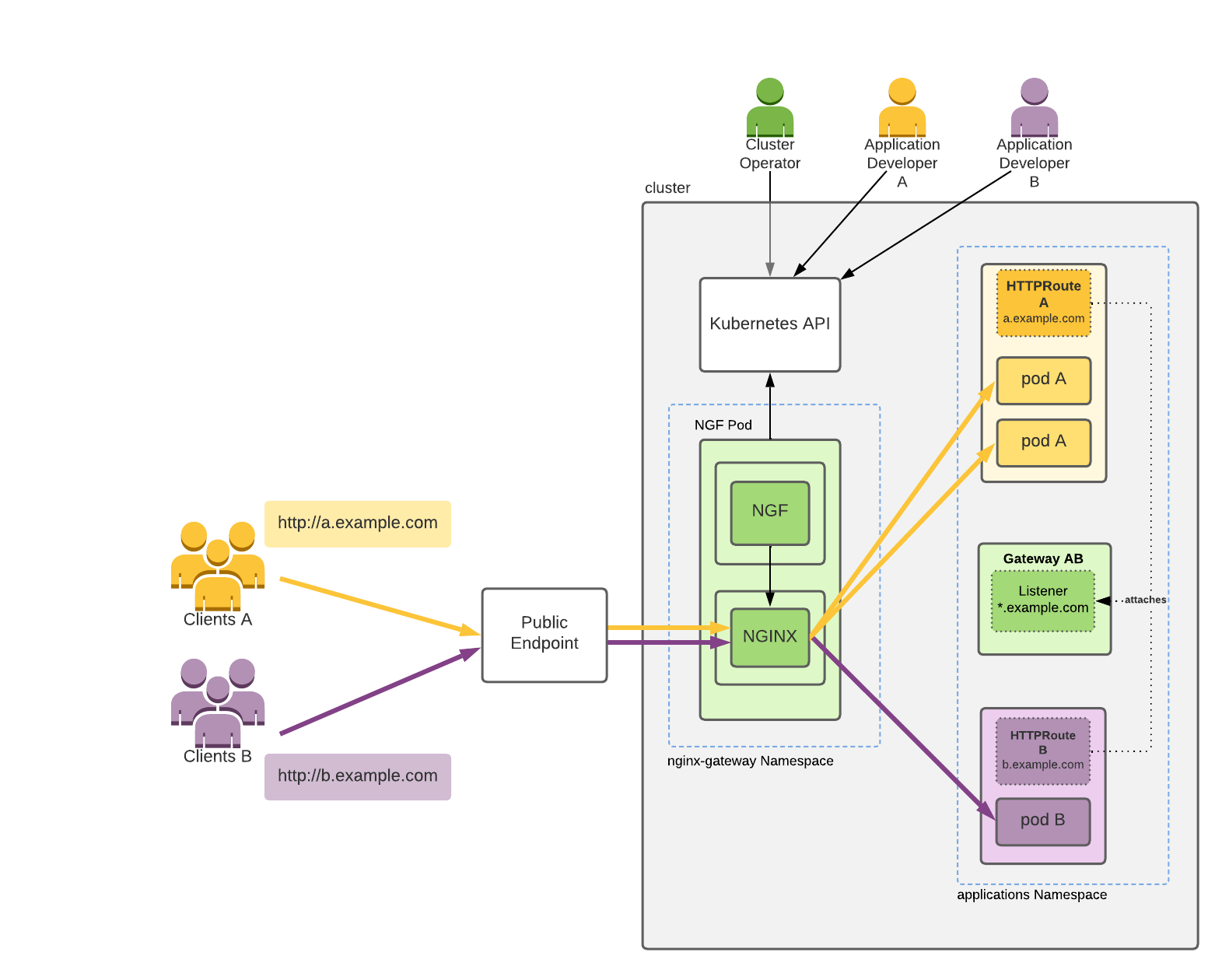

NGINX Gateway Fabric at a high level

This figure depicts an example of NGINX Gateway Fabric exposing two web applications within a Kubernetes cluster to clients on the internet:

Note:

The figure does not show many of the Kubernetes resources that the Cluster Operators and Application Developers need to create, such as Deployments and Services.

The figure shows:

- A Kubernetes cluster.

- Three users: Cluster Operator, Application Developer A, and Application Developer B. These users interact with the cluster through the Kubernetes API by creating Kubernetes objects.

- Clients A and Clients B connect to Applications A and B, respectively, which the Application Developers have deployed.

- The NGF Pod, deployed by Cluster Operator in the namespace nginx-gateway. For scalability and availability, you can have multiple replicas. This pod consists of two containers: NGINX and NGF. The NGF container interacts with the Kubernetes API to retrieve the most up-to-date Gateway API resources created within the cluster. It then dynamically configures the NGINX container based on these resources, ensuring proper alignment between the cluster state and the NGINX configuration.

- Gateway AB, created by Cluster Operator, requests a point where traffic can be translated to Services within the cluster. This Gateway includes a listener with a hostname *.example.com. Application Developers have the ability to attach their application’s routes to this Gateway if their application’s hostname matches *.example.com.

- Application A with two pods deployed in the applications namespace by Application Developer A. To expose the application to its clients (Clients A) via the host a.example.com, Application Developer A creates HTTPRoute A and attaches it to Gateway AB.

- Application B with one pod deployed in the applications namespace by Application Developer B. To expose the application to its clients (Clients B) via the host b.example.com, Application Developer B creates HTTPRoute B and attaches it to Gateway AB.

- Public Endpoint, which fronts the NGF pod. This is typically a TCP load balancer (cloud, software, or hardware) or a combination of such load balancer with a NodePort service. Clients A and B connect to their applications via the Public Endpoint.
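To make the hostname matching in the list above concrete, here is a minimal Python sketch of how a route hostname such as a.example.com could be checked against the listener hostname *.example.com. It assumes the Gateway API convention that a leading *. is a suffix match covering one or more labels. The function name hostname_matches is hypothetical, and this is not the NGINX Gateway Fabric implementation; it also ignores cases such as a listener with no hostname or a route hostname that is itself a wildcard.

```python
def hostname_matches(listener_hostname: str, route_hostname: str) -> bool:
    """Return True if route_hostname is covered by listener_hostname.

    Assumption: a listener hostname starting with "*." is treated as a
    suffix match (one or more leading labels), and any other hostname must
    match exactly. Comparison is case-insensitive.
    """
    listener = listener_hostname.lower().rstrip(".")
    route = route_hostname.lower().rstrip(".")

    if listener.startswith("*."):
        suffix = listener[1:]  # "*.example.com" -> ".example.com"
        # The route needs at least one character before the suffix.
        return route.endswith(suffix) and len(route) > len(suffix)

    return listener == route


if __name__ == "__main__":
    for host in ("a.example.com", "b.example.com", "example.com", "a.example.org"):
        print(host, hostname_matches("*.example.com", host))
```

Under this assumption, a.example.com and b.example.com can attach to Gateway AB, while example.com and a.example.org cannot.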

The yellow and purple arrows represent connections related to the client traffic, and the black arrows represent access to the Kubernetes API. The resources within the cluster are color-coded based on the user responsible for their creation.

For example, the Cluster Operator is denoted by the color green, indicating they create and manage all the green resources.

The NGINX Gateway Fabric pod

NGINX Gateway Fabric consists of two containers:

- nginx: the data plane. Consists of an NGINX master process and NGINX worker processes. The master process controls the worker processes. The worker processes handle the client traffic and load balance traffic to the backend applications.

- nginx-gateway: the control plane. Watches Kubernetes objects and configures NGINX.

These containers are deployed in a single pod as a Kubernetes Deployment.

The nginx-gateway, or the control plane, is a Kubernetes controller, written with the controller-runtime library. It watches Kubernetes objects (services, endpoints, secrets, and Gateway API CRDs), translates them to NGINX configuration, and configures NGINX.

This configuration happens in two stages:

- NGINX configuration files are written to the NGINX configuration volume shared by the nginx-gateway and nginx containers.

- The control plane reloads the NGINX process.

This is possible because the two containers share a process namespace, allowing the NGINX Gateway Fabric process to send signals to the NGINX main process.
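The two stages can be sketched in Python as follows. This is an illustration under stated assumptions, not the project's code: the helper names write_config, read_pid, and reload_nginx are hypothetical, the write-then-rename step is an illustrative choice, and the reload uses SIGHUP, which is the standard signal for asking an NGINX master process to reload its configuration.

```python
import os
import signal
import tempfile
from pathlib import Path


def write_config(conf_dir: Path, name: str, content: str) -> Path:
    """Stage 1: write one configuration file into the shared volume.

    The file is written under a temporary name and then renamed, so a
    reader never sees a half-written file. (The atomic rename is an
    illustrative choice, not something the document specifies.)
    """
    conf_dir.mkdir(parents=True, exist_ok=True)
    fd, tmp_name = tempfile.mkstemp(dir=conf_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as tmp:
        tmp.write(content)
    target = conf_dir / name
    os.replace(tmp_name, target)
    return target


def read_pid(pid_file: Path) -> int:
    """Parse the PID that the NGINX master wrote to its PID file."""
    pid = int(pid_file.read_text().strip())
    if pid <= 0:
        raise ValueError(f"invalid PID in {pid_file}: {pid}")
    return pid


def reload_nginx(pid_file: Path) -> None:
    """Stage 2: ask the NGINX master to reload by sending SIGHUP.

    This only works if the caller can see the NGINX process, which is why
    the shared process namespace matters.
    """
    os.kill(read_pid(pid_file), signal.SIGHUP)
```

In a pod, a caller would pass the shared configuration directory to write_config and the PID file path to reload_nginx; the sketch leaves those paths as parameters so it can also be exercised against a temporary directory.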

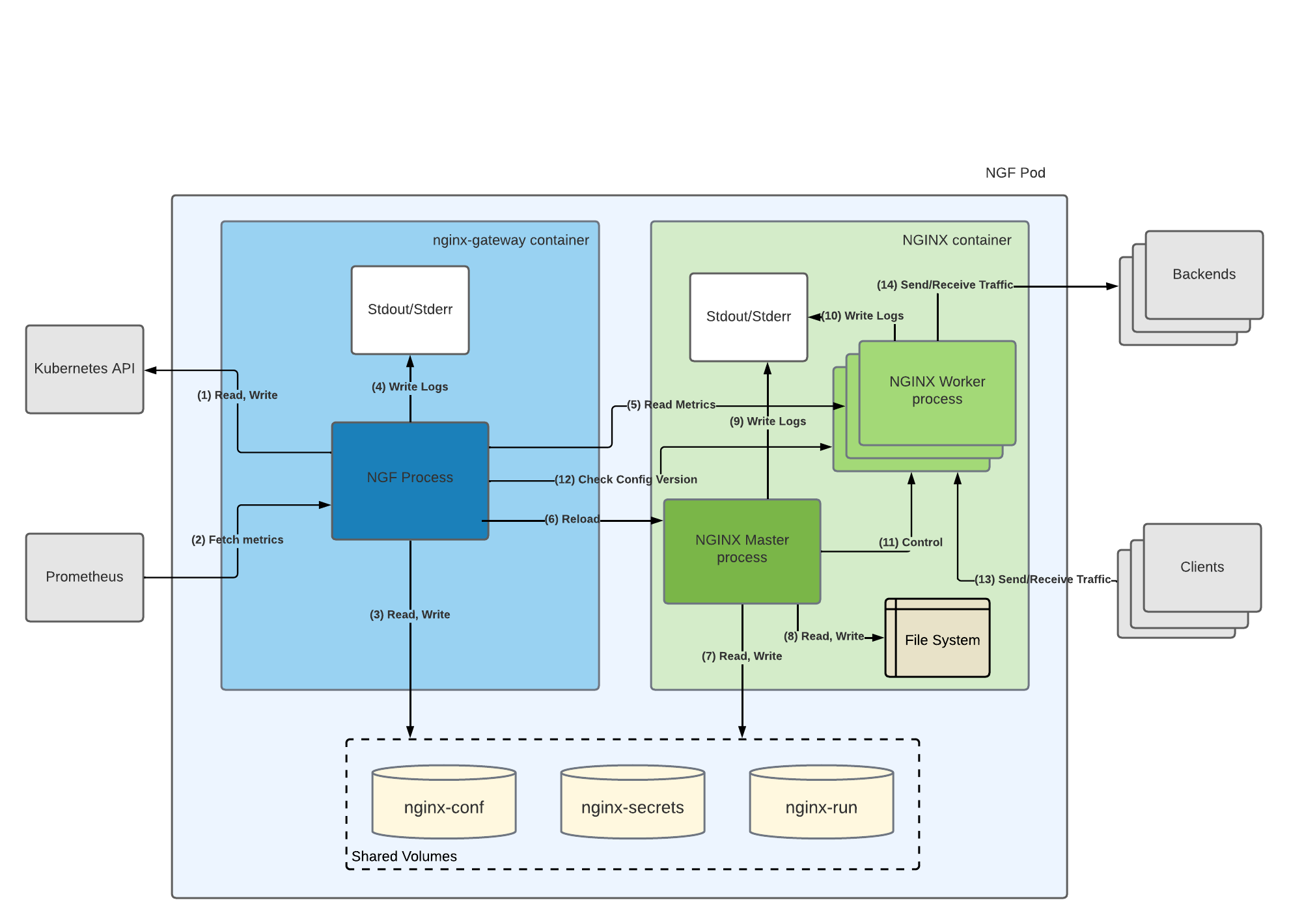

This diagram represents the connections, relationships, and interactions between processes within the nginx and nginx-gateway containers, as well as external processes and entities.

The following list describes the connections, preceded by their types in parentheses. For brevity, the suffix “process” has been omitted from the process descriptions.

- (HTTPS)

  - Read: NGF reads the Kubernetes API to get the latest versions of the resources in the cluster.

  - Write: NGF writes to the Kubernetes API to update the handled resources’ statuses and emit events. If there’s more than one replica of NGF and leader election is enabled, only the NGF pod that is leading will write statuses to the Kubernetes API.

- (HTTP, HTTPS) Prometheus fetches the controller-runtime and NGINX metrics via an HTTP endpoint that NGF exposes (:9113/metrics by default). Prometheus is not required by NGINX Gateway Fabric, and its endpoint can be turned off.

- (File I/O)

  - Write: NGF generates NGINX configuration based on the cluster resources and writes it as .conf files to the mounted nginx-conf volume, located at /etc/nginx/conf.d. It also writes TLS certificates and keys from TLS secrets referenced in the accepted Gateway resource to the nginx-secrets volume at the path /etc/nginx/secrets.

  - Read: NGF reads the PID file nginx.pid from the nginx-run volume, located at /var/run/nginx. NGF extracts the PID of the nginx process from this file in order to send reload signals to NGINX master.

- (File I/O) NGF writes logs to its stdout and stderr, which are collected by the container runtime.

- (HTTP) NGF fetches the NGINX metrics via the unix:/var/run/nginx/nginx-status.sock UNIX socket and converts them to the Prometheus format served on the metrics endpoint that Prometheus scrapes (:9113/metrics by default).

- (Signal) To reload NGINX, NGF sends the reload signal to the NGINX master.

- (File I/O)

  - Write: The NGINX master writes its PID to the nginx.pid file stored in the nginx-run volume.

  - Read: The NGINX master reads configuration files and the TLS cert and keys referenced in the configuration when it starts or during a reload. These files, certificates, and keys are stored in the nginx-conf and nginx-secrets volumes that are mounted to both the nginx-gateway and nginx containers.

- (File I/O)

  - Write: The NGINX master writes to the auxiliary Unix sockets folder, which is located in the /var/run/nginx directory.

  - Read: The NGINX master reads the nginx.conf file from the /etc/nginx directory. This file contains the global and http configuration settings for NGINX. In addition, NGINX master reads the NJS modules referenced in the configuration when it starts or during a reload. NJS modules are stored in the /usr/lib/nginx/modules directory.

- (File I/O) The NGINX master sends logs to its stdout and stderr, which are collected by the container runtime.

- (File I/O) An NGINX worker writes logs to its stdout and stderr, which are collected by the container runtime.

- (Signal) The NGINX master controls the lifecycle of NGINX workers: it creates workers with the new configuration and shuts down workers with the old configuration.

- (HTTP) To consider a configuration reload a success, NGF ensures that at least one NGINX worker has the new configuration. To do that, NGF checks a particular endpoint via the unix:/var/run/nginx/nginx-config-version.sock UNIX socket.

- (HTTP, HTTPS) A client sends traffic to and receives traffic from any of the NGINX workers on ports 80 and 443.

- (HTTP, HTTPS) An NGINX worker sends traffic to and receives traffic from the backends.
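The reload check in the list above, where NGF confirms that at least one NGINX worker has the new configuration, can be sketched as a polling loop. This is a minimal sketch under assumptions: fetch_version is a hypothetical callable standing in for the HTTP request over the config-version UNIX socket, and the idea that the endpoint reports a comparable version number is inferred from the socket name rather than stated in this document.

```python
import time
from typing import Callable


def wait_for_config_version(
    fetch_version: Callable[[], int],
    expected: int,
    timeout: float = 5.0,
    interval: float = 0.05,
) -> bool:
    """Poll until a worker reports the expected configuration version.

    fetch_version is a hypothetical stand-in for a request over the
    config-version socket; it returns the version served by whichever
    worker answers. Returns True on success and False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            if fetch_version() == expected:
                return True
        except OSError:
            # The socket may be briefly unavailable while NGINX reloads.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Passing the fetch step in as a callable keeps the retry logic separate from the transport, so the loop can be tested without a running NGINX.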

Below are additional connections not depicted in the diagram:

- (HTTPS) NGF sends product telemetry data to the F5 telemetry service.

Differences with NGINX Plus

The previous diagram depicts NGINX Gateway Fabric using NGINX Open Source. NGINX Gateway Fabric with NGINX Plus has the following difference:

- An admin can connect to the NGINX Plus API using port 8765. NGINX only allows connections from localhost.

Updating upstream servers

The normal process for applying changes to NGINX is to write the configuration files and reload NGINX. However, when using NGINX Plus, we can take advantage of the NGINX Plus API to limit the number of reloads triggered when making changes to NGINX. Specifically, when the endpoints of an application in Kubernetes change (such as when scaling up or down), the NGINX Plus API is used to update the upstream servers in NGINX with the new endpoints without a reload. This reduces the potential for a disruption that could occur when reloading.
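One way to picture an update without a reload is as a diff between the upstream servers NGINX currently has and the endpoints Kubernetes now reports, so that only the delta needs to be sent to the API. The Python sketch below shows that comparison only. The function name diff_upstream_servers and the addresses are hypothetical, the actual NGINX Plus API requests are not shown, and this is not necessarily how NGINX Gateway Fabric computes the change.

```python
from typing import Iterable, List, Set, Tuple


def diff_upstream_servers(
    current: Iterable[str], desired: Iterable[str]
) -> Tuple[List[str], List[str]]:
    """Return (to_add, to_remove) needed to turn current into desired.

    Each server is an "address:port" string. Results are sorted so the
    outcome is deterministic.
    """
    current_set: Set[str] = set(current)
    desired_set: Set[str] = set(desired)
    to_add = sorted(desired_set - current_set)
    to_remove = sorted(current_set - desired_set)
    return to_add, to_remove


if __name__ == "__main__":
    # Scaling an application from two pods to three (hypothetical addresses).
    before = ["10.0.0.1:8080", "10.0.0.2:8080"]
    after = ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"]
    print(diff_upstream_servers(before, after))
```

When both lists are empty after the diff, nothing needs to change, which is the common case when an unrelated resource is updated.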

Pod readiness

The nginx-gateway container includes a readiness endpoint available through the path /readyz. A readiness probe periodically checks this endpoint on startup, and the endpoint returns a 200 OK response once the pod can accept traffic for the data plane. Once the control plane successfully starts, the pod becomes ready.

If there are relevant Gateway API resources in the cluster, the control plane will generate the first NGINX configuration and successfully reload NGINX before the pod is considered ready.
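The readiness rule described above can be written as a small decision function. This Python sketch is illustrative only: ControlPlaneState and readyz_status are hypothetical names, and returning 503 while not ready is an assumption (a Kubernetes HTTP readiness probe treats any status outside the 200 to 399 range as a failure).

```python
from dataclasses import dataclass


@dataclass
class ControlPlaneState:
    """Hypothetical snapshot of what the control plane has done so far."""

    started: bool = False
    has_gateway_resources: bool = False
    first_config_reloaded: bool = False


def readyz_status(state: ControlPlaneState) -> int:
    """Return the HTTP status a /readyz handler could send.

    200 means ready. 503 for "not ready" is an assumption, chosen because
    a readiness probe fails on any status outside 200-399.
    """
    if not state.started:
        return 503
    if state.has_gateway_resources and not state.first_config_reloaded:
        # Relevant resources exist, so wait for the first successful reload.
        return 503
    return 200
```

With no relevant Gateway API resources in the cluster, the function reports ready as soon as the control plane has started; otherwise it waits for the first successful reload.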