The design of NGINX Ingress Controller

This document explains how the F5 NGINX Ingress Controller is designed, and how it differs when using NGINX or NGINX Plus.

The intended audience for this information is primarily the following two groups:

- Operators who want to know how the software works and understand how it can fail.

- Developers who want to contribute to the project.

We assume that the reader is familiar with core Kubernetes concepts, such as Pods, Deployments, Services, and Endpoints. For an understanding of how NGINX itself works, you can read the “Inside NGINX: How We Designed for Performance & Scale” blog post.

For conciseness in diagrams, NGINX Ingress Controller is often labelled “IC” on this page.

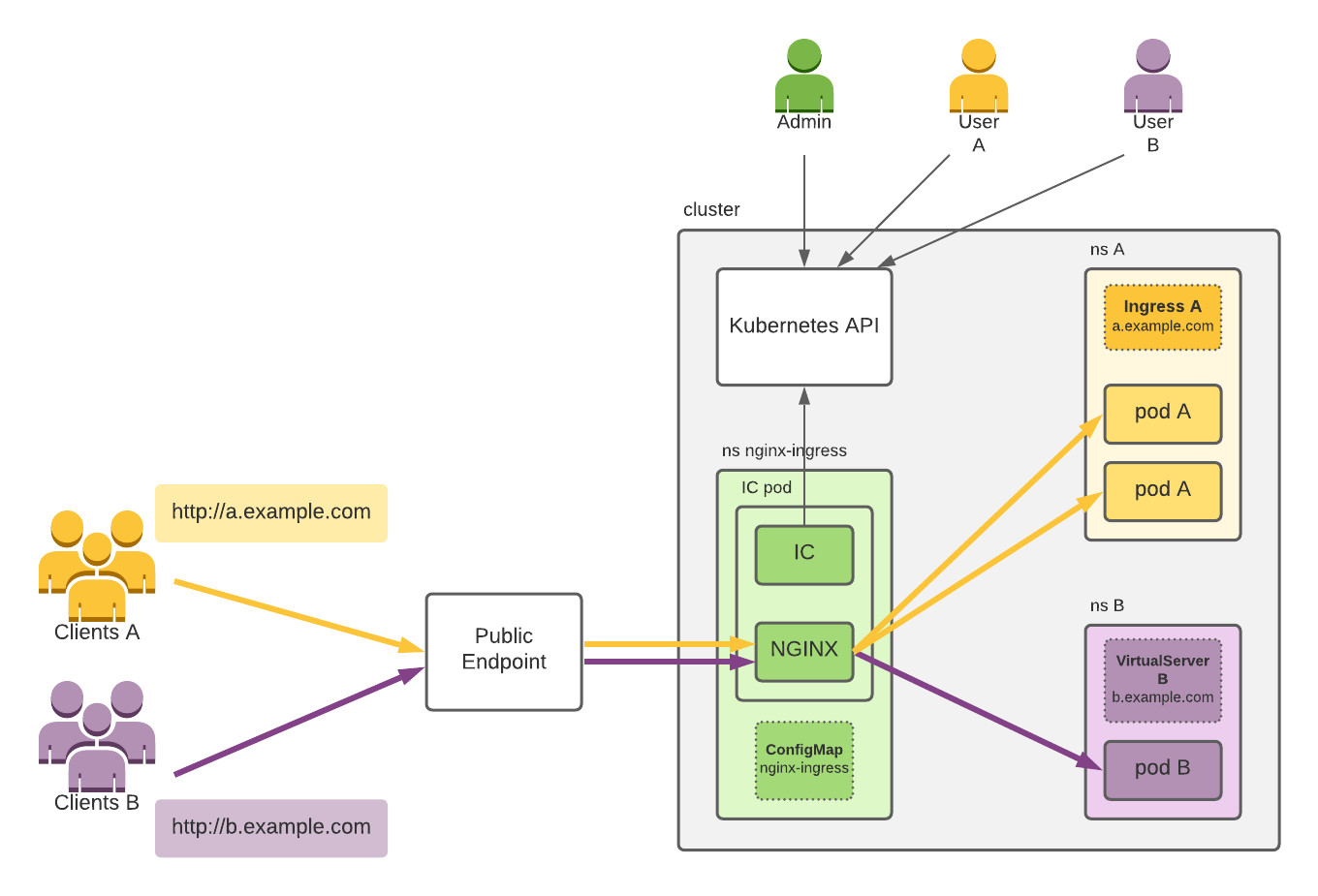

NGINX Ingress Controller at a high level

This figure depicts an example of NGINX Ingress Controller exposing two web applications within a Kubernetes cluster to clients on the internet:

Note:

For simplicity, necessary Kubernetes resources such as Deployments and Services, which Admin and the users also need to create, aren’t shown.

The figure shows:

- A Kubernetes cluster.

- Cluster users Admin, User A and User B, who use the cluster via the Kubernetes API.

- Clients A and Clients B, which connect to Applications A and B deployed by the corresponding users.

- NGINX Ingress Controller, deployed in a pod in the namespace nginx-ingress and configured using the ConfigMap resource nginx-ingress. A single pod is depicted; at least two pods are typically deployed for redundancy. NGINX Ingress Controller uses the Kubernetes API to get the latest Ingress resources created in the cluster and then configures NGINX according to those resources.

- Application A with two pods deployed in the namespace A by User A. To expose the application to its clients (Clients A) via the host a.example.com, User A creates Ingress A.

- Application B with one pod deployed in the namespace B by User B. To expose the application to its clients (Clients B) via the host b.example.com, User B creates VirtualServer B.

- Public Endpoint, which fronts the NGINX Ingress Controller pod(s). This is typically a standalone TCP load balancer (cloud, software, or hardware) or a combination of a load balancer with a NodePort service. Clients A and B connect to their applications via the Public Endpoint.

The yellow and purple arrows represent connections related to the client traffic, and the black arrows represent access to the Kubernetes API.

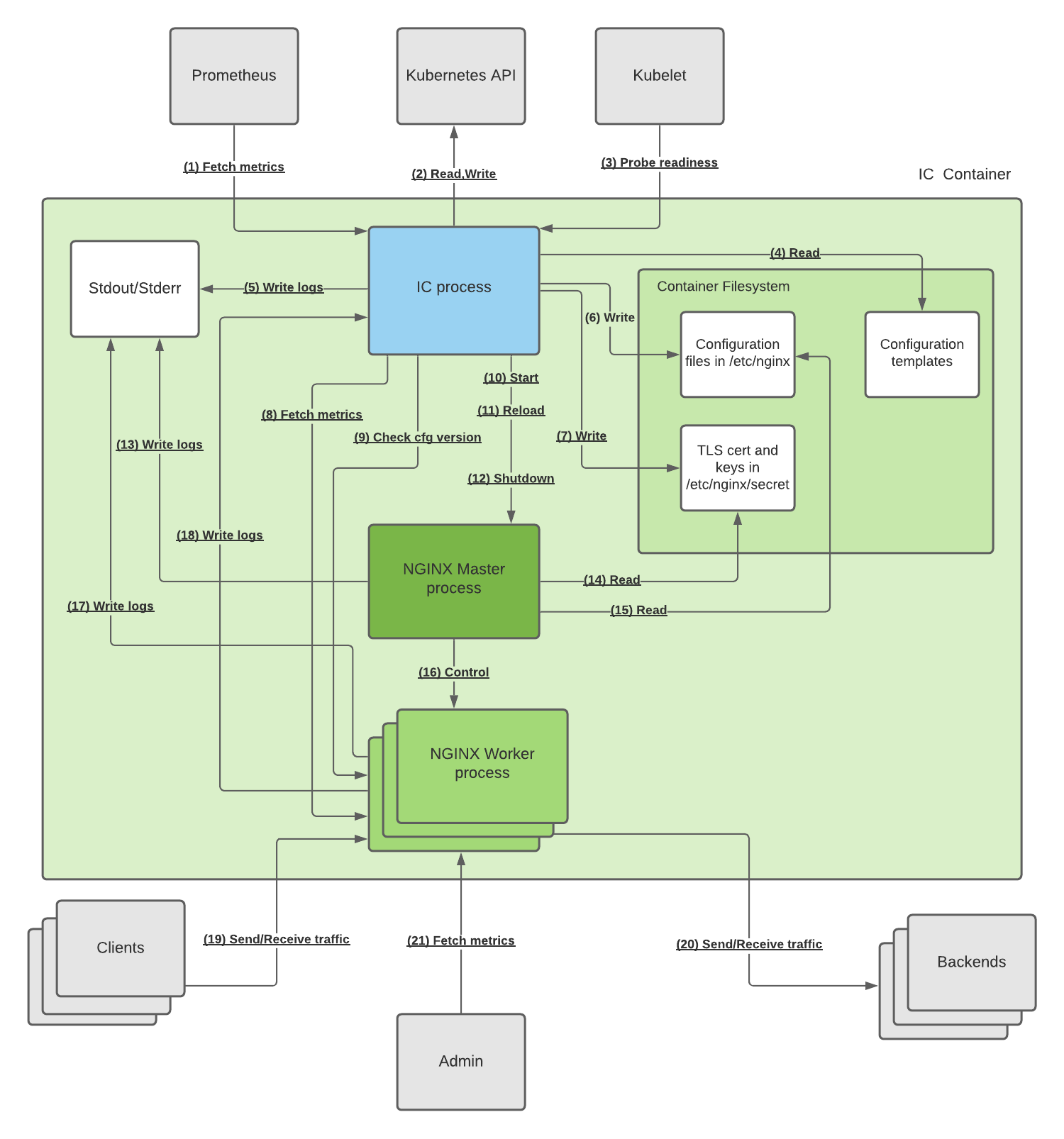

The NGINX Ingress Controller pod

The NGINX Ingress Controller pod consists of a single container, which includes the following:

- The NGINX Ingress Controller process, which configures NGINX according to Ingress and other resources created in the cluster.

- The NGINX master process, which controls NGINX worker processes.

- NGINX worker processes, which handle the client traffic and load balance the traffic to the backend applications.

The following is an architectural diagram depicting how those processes interact with each other and with some external entities:

This table describes each connection, starting with its type:

| # | Protocols | Description |
|---|---|---|
| 1 | HTTP | Prometheus fetches NGINX Ingress Controller and NGINX metrics with an NGINX Ingress Controller HTTP endpoint (default :9113/metrics). Note: Prometheus is not required and the endpoint can be turned off. |
| 2 | HTTPS | NGINX Ingress Controller reads the Kubernetes API for the latest versions of the resources in the cluster and writes to the API to update the handled resources’ statuses and emit events. |
| 3 | HTTP | Kubelet checks the NGINX Ingress Controller readiness probe (default :8081/nginx-ready) to consider the NGINX Ingress Controller pod ready. |
| 4 | File I/O | When NGINX Ingress Controller starts, it reads the configuration templates necessary for configuration generation from the filesystem. The templates are located in the / directory of the container and have the .tmpl extension. |
| 5 | File I/O | NGINX Ingress Controller writes logs to stdout and stderr, which are collected by the container runtime. |
| 6 | File I/O | NGINX Ingress Controller generates NGINX configuration based on the resources created in the cluster (see NGINX Ingress Controller is a Kubernetes controller) and writes it to the filesystem in the /etc/nginx folder. The configuration files have a .conf extension. |
| 7 | File I/O | NGINX Ingress Controller writes TLS certificates and keys from any TLS Secrets referenced in the Ingress and other resources to the filesystem. |
| 8 | HTTP | NGINX Ingress Controller fetches the NGINX metrics via the unix:/var/lib/nginx/nginx-status.sock UNIX socket and converts them to the Prometheus format used in #1. |
| 9 | HTTP | To verify a successful configuration reload, NGINX Ingress Controller ensures at least one NGINX worker has the new configuration. To do that, NGINX Ingress Controller checks a particular endpoint via the unix:/var/lib/nginx/nginx-config-version.sock UNIX socket. |
| 10 | N/A | To start NGINX, NGINX Ingress Controller runs the nginx command, which launches the NGINX master. |
| 11 | Signal | To reload NGINX, NGINX Ingress Controller runs the nginx -s reload command, which validates the configuration and sends the reload signal to the NGINX master. |
| 12 | Signal | To shut down NGINX, NGINX Ingress Controller runs the nginx -s quit command, which sends the graceful shutdown signal to the NGINX master. |
| 13 | File I/O | The NGINX master sends logs to its stdout and stderr, which are collected by the container runtime. |
| 14 | File I/O | The NGINX master reads the TLS certs and keys referenced in the configuration when it starts or reloads. |
| 15 | File I/O | The NGINX master reads configuration files when it starts or during a reload. |
| 16 | Signal | The NGINX master controls the lifecycle of NGINX workers: it creates workers with the new configuration and shuts down workers with the old configuration. |
| 17 | File I/O | An NGINX worker writes logs to its stdout and stderr, which are collected by the container runtime. |
| 18 | UDP | An NGINX worker sends the HTTP upstream server response latency logs via the Syslog protocol over the UNIX socket /var/lib/nginx/nginx-syslog.sock to NGINX Ingress Controller. In turn, NGINX Ingress Controller analyzes and transforms the logs into Prometheus metrics. |
| 19 | HTTP, HTTPS, TCP, UDP | A client sends traffic to and receives traffic from any of the NGINX workers on ports 80 and 443 and any additional ports exposed by the GlobalConfiguration resource. |
| 20 | HTTP, HTTPS, TCP, UDP | An NGINX worker sends traffic to and receives traffic from the backends. |
| 21 | HTTP | Admin can connect to the NGINX stub_status using port 8080 via an NGINX worker. By default, NGINX only allows connections from localhost. |

Differences with NGINX Plus

The previous diagram depicts NGINX Ingress Controller using NGINX. NGINX Ingress Controller with NGINX Plus has the following differences:

- To configure NGINX Plus, NGINX Ingress Controller uses configuration reloads and the NGINX Plus API. This allows NGINX Ingress Controller to dynamically change the upstream servers.

- Instead of the stub status metrics, the extended metrics available from the NGINX Plus API are used.

- In addition to TLS certs and keys, NGINX Ingress Controller writes JWKs from the secrets of the type nginx.org/jwk, and NGINX workers read them.

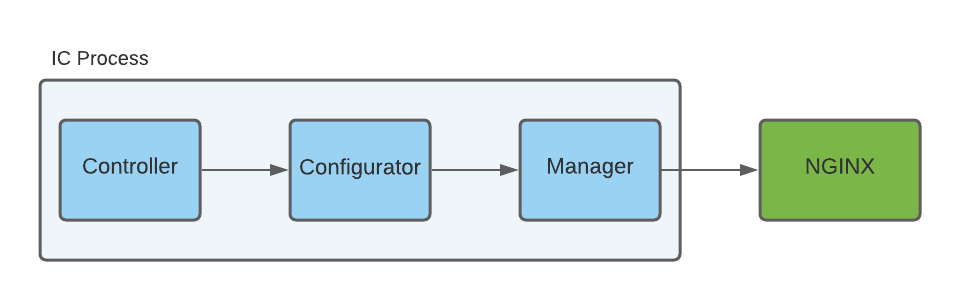

The NGINX Ingress Controller process

This section covers the architecture of the NGINX Ingress Controller process, including:

- How NGINX Ingress Controller processes a new Ingress resource created by a user.

- A summary of how NGINX Ingress Controller works in relation to other Kubernetes controllers.

- The different components of the IC process.

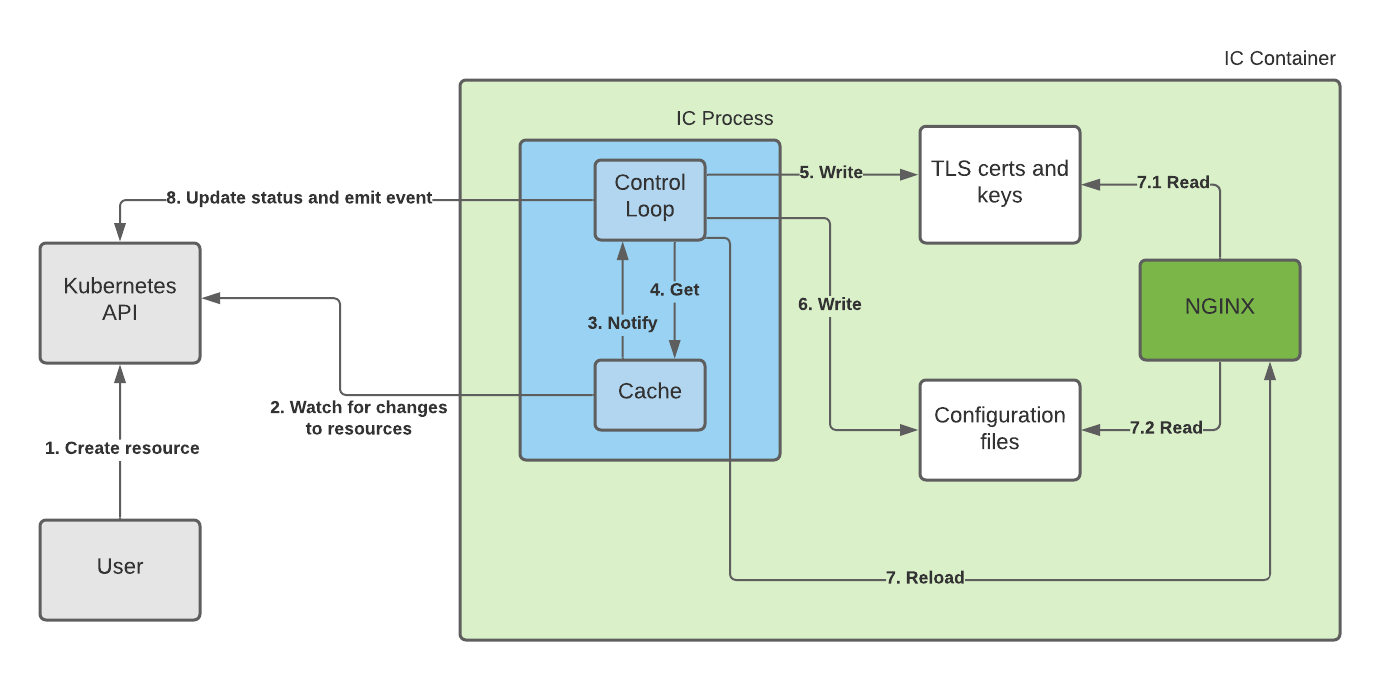

Processing a new Ingress resource

The following diagram depicts how NGINX Ingress Controller processes a new Ingress resource. For simplicity, the NGINX master and worker processes are represented as a single rectangle labelled NGINX. VirtualServer and VirtualServerRoute resources are processed similarly.

Processing a new Ingress resource involves the following steps; each step corresponds to the arrow with the same number on the diagram:

1. User creates a new Ingress resource.

2. The NGINX Ingress Controller process has a Cache of the resources in the cluster. The Cache includes only the resources NGINX Ingress Controller is concerned with, such as Ingresses. The Cache stays in sync with the Kubernetes API by watching for changes to the resources.

3. Once the Cache has the new Ingress resource, it notifies the Control Loop about the changed resource.

4. The Control Loop gets the latest version of the Ingress resource from the Cache. Since the Ingress resource references other resources, such as TLS Secrets, the Control Loop gets the latest versions of those referenced resources as well.

5. The Control Loop generates TLS certificates and keys from the TLS Secrets and writes them to the filesystem.

6. The Control Loop generates the NGINX configuration files that correspond to the Ingress resource and writes them to the filesystem.

7. The Control Loop reloads NGINX and waits for NGINX to successfully reload. As part of the reload:
    - NGINX reads the TLS certs and keys.
    - NGINX reads the configuration files.

8. The Control Loop emits an event for the Ingress resource and updates its status. If the reload fails, the event includes the error message.

NGINX Ingress Controller is a Kubernetes controller

With the context from the previous sections, we can generalize how NGINX Ingress Controller works:

NGINX Ingress Controller constantly processes both new resources and changes to the existing resources in the cluster. As a result, the NGINX configuration stays up-to-date with the resources in the cluster.

NGINX Ingress Controller is an example of a Kubernetes Controller: NGINX Ingress Controller runs a control loop that ensures NGINX is configured according to the desired state (Ingresses and other resources).

The desired state is based on the following built-in Kubernetes resources and Custom Resources (CRs):

- Layer 7 load balancing configuration:
    - Ingresses
    - VirtualServers (CR)
    - VirtualServerRoutes (CR)

- Layer 7 policies:
    - Policies (CR)

- Layer 4 load balancing configuration:
    - TransportServers (CR)

- Service discovery:
    - Services
    - Endpoints
    - Pods

- Secret configuration:
    - Secrets

- Global configuration:
    - ConfigMap (only one resource)
    - GlobalConfiguration (CR, only one resource)

NGINX Ingress Controller can watch additional Custom Resources, which are less common and not enabled by default:

- NGINX App Protect resources (APPolicies, APLogConfs, APUserSigs)

- IngressLink resource (only one resource)

NGINX Ingress Controller process components

In this section, we describe the components of the NGINX Ingress Controller process and how they interact, including:

- How NGINX Ingress Controller watches for resource changes.

- The main components of the NGINX Ingress Controller Control Loop.

- How those components process a resource change.

- Additional components that are crucial for processing changes.

NGINX Ingress Controller is written in Go and relies heavily on the Go client for Kubernetes. Where relevant, we include links to the source code on GitHub.

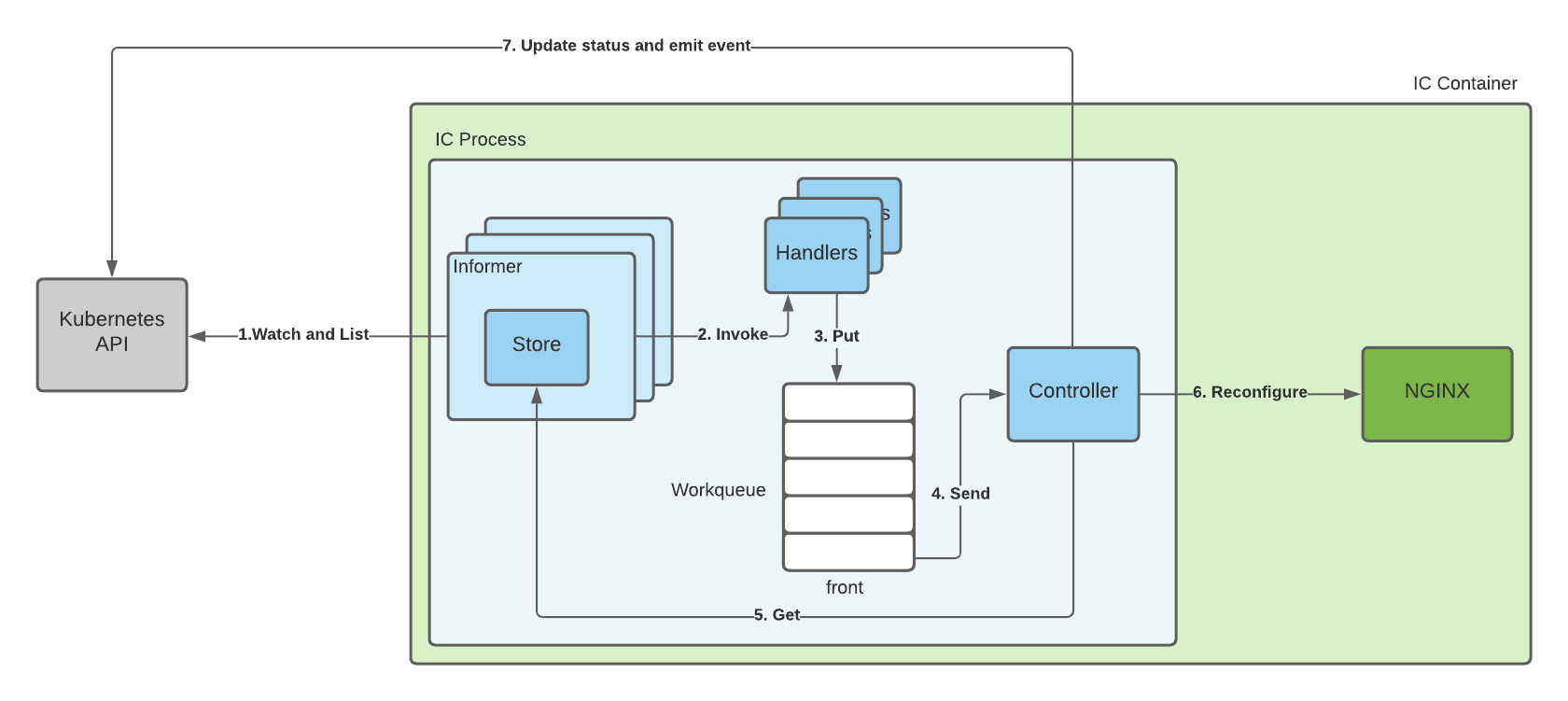

Resource caches

In an earlier section, Processing a New Ingress Resource, we mentioned that NGINX Ingress Controller has a cache of the resources in the cluster that stays in sync with the Kubernetes API by watching them for changes.

We also mentioned that once the cache is updated, it notifies the control loop about the changed resources. The cache is actually a collection of informers. The following diagram shows how changes to resources are processed by NGINX Ingress Controller.

- For every resource type that NGINX Ingress Controller monitors, it creates an Informer. The Informer includes a Store that holds the resources of that type. To keep the Store in sync with the latest versions of the resources in the cluster, the Informer calls the Watch and List Kubernetes APIs for that resource type (see the arrow 1. Watch and List on the diagram).

- When a change happens in the cluster (for example, a new resource is created), the Informer updates its Store and invokes Handlers for that Informer (see the arrow 2. Invoke).

- NGINX Ingress Controller registers Handlers for every Informer. Most of the time, a Handler creates an entry for the affected resource in the Workqueue, where a workqueue element includes the type of the resource and its namespace and name (see the arrow 3. Put).

- The Workqueue always tries to drain itself: if there is an element at the front, the queue removes the element and sends it to the Controller by calling a callback function (see the arrow 4. Send).

- The Controller is the primary component of NGINX Ingress Controller and represents the Control Loop, explained in The control loop section. To process a workqueue element, the Controller component gets the latest version of the resource from the Store (see the arrow 5. Get), reconfigures NGINX according to the resource (see the arrow 6. Reconfigure), updates the resource status, and emits an event via the Kubernetes API (see the arrow 7. Update status and emit event).
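To make the Handler, Workqueue, and Controller interaction concrete, here is a simplified Go sketch that uses only the standard library. It is a hypothetical stand-in, not the Kubernetes client-go workqueue that NGINX Ingress Controller builds on: the task type and the workqueue, Put, and Drain names are assumptions. As in the description above, a queue element carries only the kind and the namespace/name key, and the sync callback reads the latest object from a store when it runs.

```go
package main

import (
	"fmt"
	"sync"
)

// task is a hypothetical workqueue element: the resource kind and its
// "namespace/name" key. It deliberately carries no copy of the object.
type task struct {
	Kind string
	Key  string
}

// workqueue is a minimal FIFO that ignores a task if an identical one is
// already waiting, so several events for one resource lead to one sync.
type workqueue struct {
	mu      sync.Mutex
	items   []task
	pending map[task]bool
}

func newWorkqueue() *workqueue {
	return &workqueue{pending: map[task]bool{}}
}

// Put is what a Handler calls when its Informer reports a change.
func (q *workqueue) Put(t task) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if q.pending[t] {
		return
	}
	q.pending[t] = true
	q.items = append(q.items, t)
}

// pop removes the front task; ok is false when the queue is empty.
func (q *workqueue) pop() (t task, ok bool) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if len(q.items) == 0 {
		return task{}, false
	}
	t = q.items[0]
	q.items = q.items[1:]
	delete(q.pending, t)
	return t, true
}

// Drain hands queued tasks to syncFn one at a time on the calling goroutine
// until the queue is empty, and returns how many tasks were processed.
func (q *workqueue) Drain(syncFn func(task)) int {
	n := 0
	for {
		t, ok := q.pop()
		if !ok {
			return n
		}
		syncFn(t)
		n++
	}
}

func main() {
	// store stands in for an Informer's Store: key -> latest object version.
	store := map[string]string{"default/cafe-ingress": "v2"}
	q := newWorkqueue()
	q.Put(task{"Ingress", "default/cafe-ingress"}) // resource created (v1)
	q.Put(task{"Ingress", "default/cafe-ingress"}) // updated to v2 before processing
	processed := q.Drain(func(t task) {
		fmt.Printf("sync %s %s at %s\n", t.Kind, t.Key, store[t.Key])
	})
	fmt.Println("processed:", processed)
	// prints:
	// sync Ingress default/cafe-ingress at v2
	// processed: 1
}
```

Because an element holds a key rather than a copy of the object, two events for the same resource that arrive before it is processed collapse into a single sync that sees the newest version. Draining on one goroutine processes one change at a time.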

The control loop

This section discusses the main components of NGINX Ingress Controller, which comprise the control loop:

- Controller
    - Runs the NGINX Ingress Controller control loop.
    - Instantiates Informers, Handlers, the Workqueue and additional helper components.
    - Includes the sync method, which is called by the Workqueue to process a changed resource.
    - Passes changed resources to Configurator to re-configure NGINX.

- Configurator
    - Generates NGINX configuration files, TLS certificates and keys, and JWKs based on the Kubernetes resource.
    - Uses Manager to write the generated files and reload NGINX.

- Manager
    - Controls the lifecycle of NGINX (starting, reloading, quitting). See Reloading NGINX for more details about reloading.
    - Manages the configuration files, TLS keys and certs, and JWKs.

The following diagram shows how the three components interact:

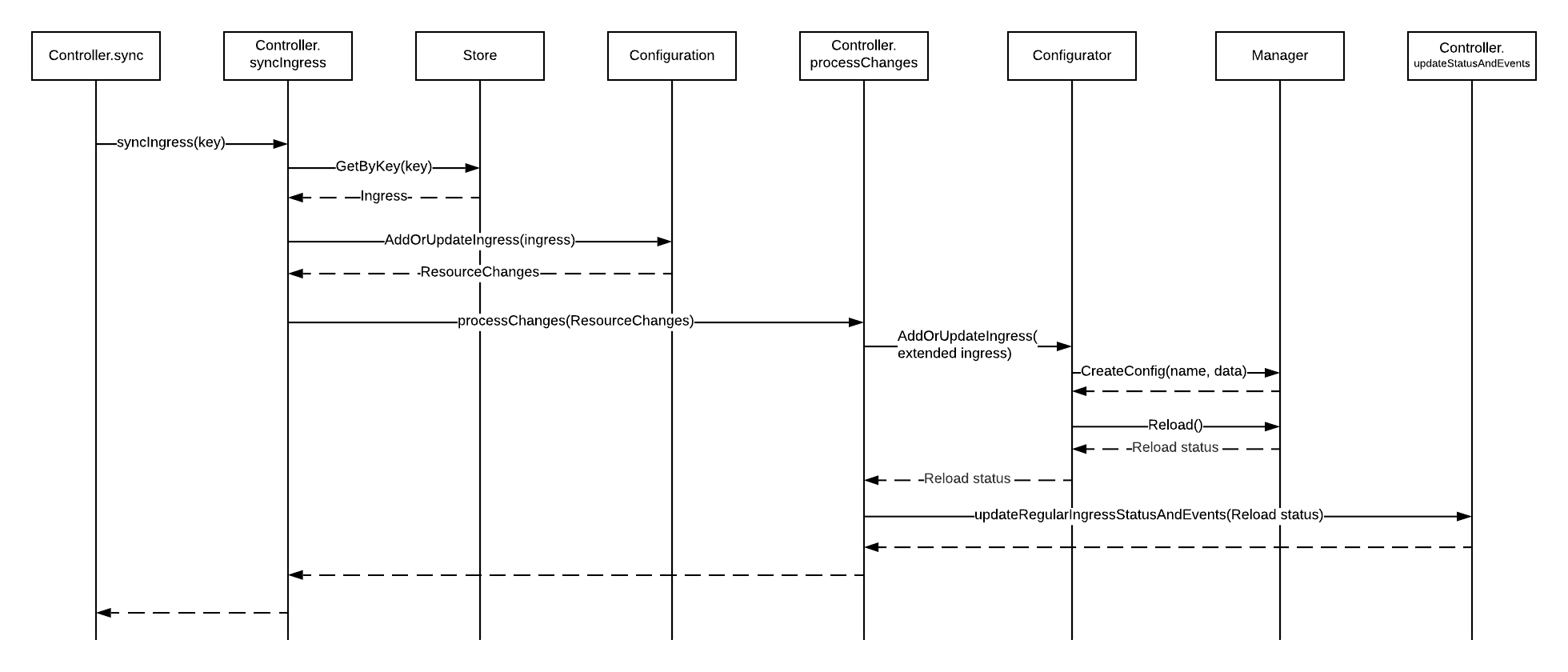

The Controller sync method

The Controller sync method is called by the Workqueue to process a change of a resource. The method determines the kind of the resource and calls the appropriate sync method (such as syncIngress for Ingress resources).
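A minimal Go sketch of this dispatch step follows. The syncTask and controller types are hypothetical, only two kinds are shown, and the per-kind methods merely record that they ran; in the real controller, each one would look up the object in its Store by key.

```go
package main

import "fmt"

// syncTask is a hypothetical workqueue element: resource kind plus key.
type syncTask struct {
	Kind string
	Key  string // "namespace/name", for example "default/cafe-ingress"
}

// controller holds placeholder per-kind sync methods; each receives only
// the key and is expected to fetch the latest object from its Store.
type controller struct {
	calls []string // records which sync method ran, for demonstration
}

func (c *controller) syncIngress(key string) error {
	c.calls = append(c.calls, "syncIngress "+key)
	return nil
}

func (c *controller) syncVirtualServer(key string) error {
	c.calls = append(c.calls, "syncVirtualServer "+key)
	return nil
}

// sync is the entry point the Workqueue calls: it only dispatches by kind.
func (c *controller) sync(t syncTask) error {
	switch t.Kind {
	case "Ingress":
		return c.syncIngress(t.Key)
	case "VirtualServer":
		return c.syncVirtualServer(t.Key)
	default:
		return fmt.Errorf("no sync method for kind %q", t.Kind)
	}
}

func main() {
	c := &controller{}
	_ = c.sync(syncTask{Kind: "Ingress", Key: "default/cafe-ingress"})
	err := c.sync(syncTask{Kind: "Unknown", Key: "default/x"})
	fmt.Println(c.calls, err)
	// prints: [syncIngress default/cafe-ingress] no sync method for kind "Unknown"
}
```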

To explain how the sync methods work, we will examine the most important one: the syncIngress method, and describe how it processes a new Ingress resource.

- The Workqueue calls the sync method and passes it a workqueue element that includes the changed resource’s kind and key (the key is the resource’s namespace/name, such as “default/cafe-ingress”).

- Using the kind, the sync method calls the appropriate sync method and passes the resource key. For Ingress resources, the method is syncIngress.

- syncIngress gets the Ingress resource from the Ingress Store using the key. The Store is controlled by the Ingress Informer. In the code, we use the helper storeToIngressLister type that wraps the Store.

- syncIngress calls AddOrUpdateIngress of the Configuration, passing the Ingress along. The Configuration is a component that represents a valid collection of load balancing configuration resources (Ingresses, VirtualServers, VirtualServerRoutes, TransportServers), ready to be converted to the NGINX configuration (see the Configuration section for more details). AddOrUpdateIngress returns a list of ResourceChanges, which must be reflected in the NGINX config. Typically, for a new Ingress resource, the Configuration returns only a single ResourceChange.

- syncIngress calls processChanges, which processes the single Ingress ResourceChange.

- processChanges creates an extended Ingress resource (IngressEx) that includes the original Ingress resource and its dependencies, such as Endpoints and Secrets, to generate the NGINX configuration. For simplicity, we don’t show this step on the diagram.

- processChanges calls AddOrUpdateIngress of the Configurator and passes the extended Ingress resource.

- Configurator generates an NGINX configuration file based on the extended Ingress resource, then:
    - Calls Manager’s CreateConfig() to update the config for the Ingress resource.
    - Calls Manager’s Reload() to reload NGINX.

- The reload status is propagated from Manager to processChanges, and is either a success or a failure with an error message.

- processChanges calls updateRegularIngressStatusAndEvent to update the status of the Ingress resource and emit an event with the status of the reload: both make an API call to the Kubernetes API.

Additional notes:

- Many details are not included for conciseness: the source code provides the most granular detail.

- The syncVirtualServer, syncVirtualServerRoute, and syncTransportServer methods are similar to syncIngress, while other sync methods differ. However, those other methods typically find the affected Ingress, VirtualServer, and TransportServer resources and regenerate the configuration for them.

- The Workqueue has only a single worker thread that calls the sync method synchronously, meaning the Control Loop processes only one change at a time.

Helper components

There are two additional helper components crucial for processing changes: Configuration and LocalSecretStore.

Configuration

Configuration holds the latest valid state of the NGINX Ingress Controller load balancing configuration resources: Ingresses, VirtualServers, VirtualServerRoutes, TransportServers, and GlobalConfiguration.

The Configuration supports add, update and delete operations on the resources. When you invoke these operations on a resource in the Configuration, it performs the following:

- Validates the object (for add or update).

- Calculates the changes to the affected resources that are necessary to propagate to the NGINX configuration, returning the changes to the caller.

For example, when you add a new Ingress resource, the Configuration returns a change requiring NGINX Ingress Controller to add the configuration for that Ingress to the NGINX configuration files. If you make an existing Ingress resource invalid, the Configuration returns a change requiring NGINX Ingress Controller to remove the configuration for that Ingress from the NGINX configuration files.

Additionally, the Configuration ensures that only one Ingress/VirtualServer/TransportServer (TLS Passthrough) resource holds a particular host (for example, cafe.example.com) and only one TransportServer (TCP/UDP) holds a particular listener (such as port 53 for UDP). This ensures that no host or listener collisions happen in the NGINX configuration.

Ultimately, NGINX Ingress Controller ensures the NGINX config on the filesystem reflects the state of the objects in the Configuration at any point in time.
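The host-ownership and change-calculation behavior of the Configuration can be illustrated with a small Go model. Everything here is a hypothetical simplification: resources are reduced to a key and a single host, an empty host stands in for a validation failure, problems are returned as plain errors, and the rule that the first resource to claim a host keeps it is an assumption made for the sketch, not a description of how NGINX Ingress Controller resolves conflicts.

```go
package main

import "fmt"

// ChangeOp says what must happen to the NGINX config for one resource.
type ChangeOp string

const (
	AddOrUpdate ChangeOp = "AddOrUpdate"
	Delete      ChangeOp = "Delete"
)

// ResourceChange is a hypothetical, simplified entry in the change list.
type ResourceChange struct {
	Op  ChangeOp
	Key string
}

// Configuration records which resource holds each host.
type Configuration struct {
	hostOwner map[string]string // host -> resource key
	resources map[string]string // resource key -> host
}

func NewConfiguration() *Configuration {
	return &Configuration{hostOwner: map[string]string{}, resources: map[string]string{}}
}

// AddOrUpdate validates a resource and returns the changes NGINX needs.
func (c *Configuration) AddOrUpdate(key, host string) ([]ResourceChange, error) {
	oldHost, existed := c.resources[key]
	if host == "" { // stand-in for real validation
		err := fmt.Errorf("%s: host must not be empty", key)
		if !existed {
			return nil, err
		}
		// A previously valid resource became invalid: remove its config.
		delete(c.resources, key)
		delete(c.hostOwner, oldHost)
		return []ResourceChange{{Delete, key}}, err
	}
	if owner, taken := c.hostOwner[host]; taken && owner != key {
		// Assumed rule: the first holder keeps the host; state is unchanged.
		return nil, fmt.Errorf("%s: host %s is already taken by %s", key, host, owner)
	}
	if existed && oldHost != host {
		delete(c.hostOwner, oldHost) // the resource moved to a new host
	}
	c.hostOwner[host] = key
	c.resources[key] = host
	return []ResourceChange{{AddOrUpdate, key}}, nil
}

func main() {
	c := NewConfiguration()
	fmt.Println(c.AddOrUpdate("a/cafe", "cafe.example.com"))
	// prints: [{AddOrUpdate a/cafe}] <nil>
	_, err := c.AddOrUpdate("b/cafe", "cafe.example.com")
	fmt.Println(err)
	// prints: b/cafe: host cafe.example.com is already taken by a/cafe
	fmt.Println(c.AddOrUpdate("a/cafe", ""))
	// prints: [{Delete a/cafe}] a/cafe: host must not be empty
}
```

In this sketch, a rejected update leaves the previous state untouched, so the generated configuration for that host keeps reflecting the last valid resource.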

LocalSecretStore

LocalSecretStore (an implementation of the SecretStore interface) holds the valid Secret resources and keeps the corresponding files on the filesystem in sync with them. Secrets are used to hold TLS certificates and keys (type kubernetes.io/tls), CAs (nginx.org/ca), JWKs (nginx.org/jwk), and client secrets for an OIDC provider (nginx.org/oidc).

When Controller processes a change to a configuration resource like Ingress, it creates an extended version of the resource that includes the dependencies (such as Secrets) necessary to generate the NGINX configuration. LocalSecretStore allows Controller to get the filesystem reference for a secret using the secret key (namespace/name).

Reloading NGINX

The following sections describe how NGINX reloads and how NGINX Ingress Controller specifically affects this process.

How NGINX reloads work

Reloading NGINX is necessary to apply new configuration changes and occurs with these steps:

- The administrator sends a HUP (hangup) signal to the NGINX master process to trigger a reload.

- The master process starts worker processes with the new configuration and gracefully shuts down the worker processes with the old configuration.

- The administrator verifies the reload has successfully finished.

The NGINX documentation has more details about reloading.

How to reload NGINX and confirm success

The NGINX binary (nginx) supports the reload operation with the -s reload option. When you run this option:

- It validates the new NGINX configuration and, if it is invalid, exits and prints the error messages to stderr.

- It sends a HUP signal to the NGINX master process and exits.

As an alternative, you can send a HUP signal to the NGINX master process directly.

Once the reload operation has been invoked with nginx -s reload, the command does not wait for NGINX to finish reloading. This means it is the responsibility of an administrator to check that the reload has finished, for which there are a few options:

- Check if the master process created new worker processes, for example by running ps or reading the /proc file system.

- Send an HTTP request to NGINX to see if a new worker process responds. A response from a new worker signifies that NGINX reloaded successfully; this method requires additional NGINX configuration, explained below.

NGINX reloads take roughly 200ms. The factors affecting reload time are configuration size and details, the number of TLS certificates/keys, enabled modules, and available CPU resources.

Potential problems

Most of the time, if nginx -s reload executes, the reload will also succeed. In the rare case a reload fails, the NGINX master process will print an error message. This is an example:

2022/07/09 00:56:42 [emerg] 1353#1353: limit_req "one" uses the "$remote_addr" key while previously it used the "$binary_remote_addr" key

The operation is graceful; reloading doesn’t lead to any traffic loss by NGINX. However, frequent reloads can lead to high memory utilization and potential OOM (Out-Of-Memory) errors, resulting in traffic loss. This is most likely to happen if you (1) proxy traffic that uses long-lived connections (for example, WebSockets or gRPC) and (2) reload frequently. In these scenarios, you can end up with multiple generations of NGINX worker processes that are shutting down (unless you configure worker_shutdown_timeout, which forces old workers to shut down after the timeout). Eventually, all those worker processes can exhaust the system’s available memory.

Old NGINX workers will not shut down until all connections are terminated either by clients or backends, unless you configure worker_shutdown_timeout. Since both the old and new NGINX worker processes coexist during a reload, reloading can lead to two spikes in memory utilization. With a lack of available memory, the NGINX master process can fail to create new worker processes.

Reloading in NGINX Ingress Controller

NGINX Ingress Controller reloads NGINX to apply configuration changes.

To facilitate reloading, NGINX Ingress Controller configures a server listening on the Unix socket unix:/var/lib/nginx/nginx-config-version.sock that responds with the configuration version for the /configVersion URI. NGINX Ingress Controller writes the configuration for this server to /etc/nginx/config-version.conf.

Reloads occur with this sequence of steps:

- NGINX Ingress Controller updates generated configuration files, including any secrets.

- NGINX Ingress Controller updates the config version in /etc/nginx/config-version.conf.

- NGINX Ingress Controller runs nginx -s reload. If the command fails, NGINX Ingress Controller logs the error and considers the reload failed.

- If the command succeeds, NGINX Ingress Controller periodically checks for the config version by sending an HTTP request to the config version server on unix:/var/lib/nginx/nginx-config-version.sock.

- Once NGINX Ingress Controller sees the correct config version returned by NGINX, it considers the reload successful. If it doesn’t see the correct configuration version after the configurable timeout (-nginx-reload-timeout), NGINX Ingress Controller considers the reload failed.

The NGINX Ingress Controller Control Loop stops during a reload so that it cannot affect configuration files or reload NGINX until the current reload succeeds or fails.

When NGINX Ingress Controller reloads NGINX

NGINX Ingress Controller reloads NGINX every time the Control Loop processes a change that affects the generated NGINX configuration. In general, every time a monitored resource is changed, NGINX Ingress Controller will regenerate the configuration and reload NGINX.

There are three special cases:

- Start. When NGINX Ingress Controller starts, it processes all resources in the cluster and only then reloads NGINX. This avoids a “reload storm” by reloading only once.

- Batch updates. When NGINX Ingress Controller receives a number of concurrent requests from the Kubernetes API, it will pause NGINX reloads until the task queue is empty. This reduces the number of reloads to minimize the impact of batch updates and reduce the risk of OOM (Out of Memory) errors.

- NGINX Plus. If NGINX Ingress Controller is using NGINX Plus, it will not reload NGINX Plus for changes to the Endpoints resources. In this case, NGINX Ingress Controller will use the NGINX Plus API to update the corresponding upstreams and skip reloading.
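The batching and NGINX Plus special cases can be summarized by a small Go decision function. It is an illustrative model under stated assumptions, not the controller's actual logic: the change and plan types and planBatch are hypothetical, and a batch is simply the list of changes processed while the queue was non-empty. In the model, any change other than an Endpoints change under NGINX Plus requires regenerated configuration, and the whole batch needs at most one reload.

```go
package main

import "fmt"

// change is a hypothetical record of one processed resource change.
type change struct {
	Kind string // e.g. "Ingress", "Endpoints"
	Key  string
}

// plan summarizes what a batch of changes requires.
type plan struct {
	Reload          bool
	UpstreamUpdates []string // keys applied through the NGINX Plus API
}

// planBatch decides how to apply a batch of changes: at most one reload for
// the whole batch and, with NGINX Plus, Endpoints changes applied through
// the API without a reload.
func planBatch(changes []change, nginxPlus bool) plan {
	var p plan
	for _, c := range changes {
		if c.Kind == "Endpoints" && nginxPlus {
			p.UpstreamUpdates = append(p.UpstreamUpdates, c.Key)
			continue
		}
		p.Reload = true // any other change needs regenerated configuration
	}
	return p
}

func main() {
	batch := []change{
		{"Ingress", "default/cafe-ingress"},
		{"Endpoints", "default/coffee-svc"},
		{"Ingress", "default/tea-ingress"},
	}
	fmt.Printf("%+v\n", planBatch(batch, false))
	// prints: {Reload:true UpstreamUpdates:[]}
	fmt.Printf("%+v\n", planBatch(batch[1:2], true))
	// prints: {Reload:false UpstreamUpdates:[default/coffee-svc]}
}
```

The start-up case follows the same idea: treating every resource found at start as one large batch yields a single reload.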